DALL·E: Introducing Outpainting

Extend creativity and tell a bigger story with DALL·E images of any size

Original outpainting by Emma Catnip

Today we’re introducing Outpainting, a new feature that helps users extend their creativity by continuing an image beyond its original borders, adding visual elements in the same style or taking a story in new directions…

Read More

Our Approach to Alignment Research

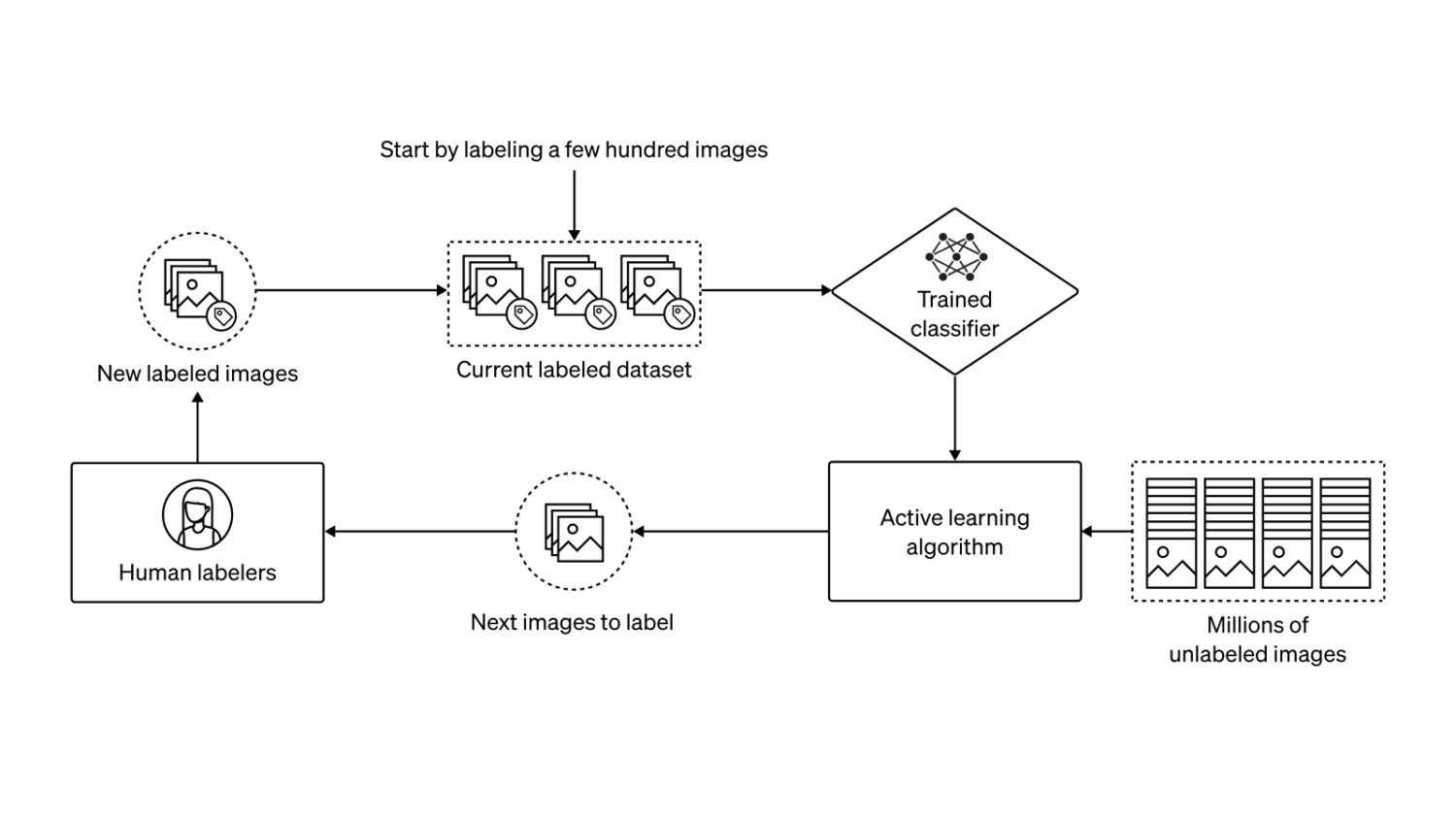

Our approach to aligning AGI is empirical and iterative. We are improving our AI systems’ ability to learn from human feedback and to assist humans in evaluating AI. Our goal is to build a sufficiently aligned AI system that can help us solve all other alignment problems.

Introduction

Our alignment research aims to make artificial…

Read More

New and Improved Content Moderation Tooling

We are introducing a new and improved content moderation tool: the Moderation endpoint improves upon our previous content filter, and is available for free today to OpenAI API developers.

To help developers protect their applications against possible misuse, we are introducing the faster and more accurate Moderation endpoint. This endpoint provides OpenAI API developers with…

Read More

DALL·E Now Available in Beta

We’ll invite 1 million people from our waitlist over the coming weeks. Users can create with DALL·E using free credits that refill every month, and buy additional credits in 115-generation increments for $15.

DALL·E, the AI system that creates realistic images and art from a description in natural language, is now…

Read More

Reducing Bias and Improving Safety in DALL·E 2

Today, we are implementing a new technique so that DALL·E generates images of people that more accurately reflect the diversity of the world’s population. This technique is applied at the system level when DALL·E is given a prompt describing a person that does not specify race or gender, like “firefighter.” Based on our internal evaluation,…

Read More

DALL·E 2: Extending Creativity

As part of our DALL·E 2 research preview, more than 3,000 artists from more than 118 countries have incorporated DALL·E into their creative workflows. The artists in our early access group have helped us discover new uses for DALL·E and have served as key voices as we’ve made decisions about DALL·E’s features. Creative professionals using…

Read More

DALL·E 2 Pre-Training Mitigations

In order to share the magic of DALL·E 2 with a broad audience, we needed to reduce the risks associated with powerful image generation models. To this end, we put various guardrails in place to prevent generated images from violating our content policy. This post focuses on pre-training mitigations, a subset of these guardrails which…

Read More

Learning to Play Minecraft with Video PreTraining (VPT)

We trained a neural network to play Minecraft by Video PreTraining (VPT) on a massive unlabeled video dataset of human Minecraft play, while using only a small amount of labeled contractor data. With fine-tuning, our model can learn to craft diamond tools, a task that usually takes proficient humans over 20 minutes (24,000 actions). Our…

Read More