Google at EMNLP 2022

Posted by Malaya Jules, Program Manager, Google

This week, the premier conference on Empirical Methods in Natural Language Processing (EMNLP 2022) is being held in Abu Dhabi, United Arab Emirates. We are proud to be a Diamond Sponsor of EMNLP 2022, with Google researchers contributing at all levels. This year we are presenting over 50…

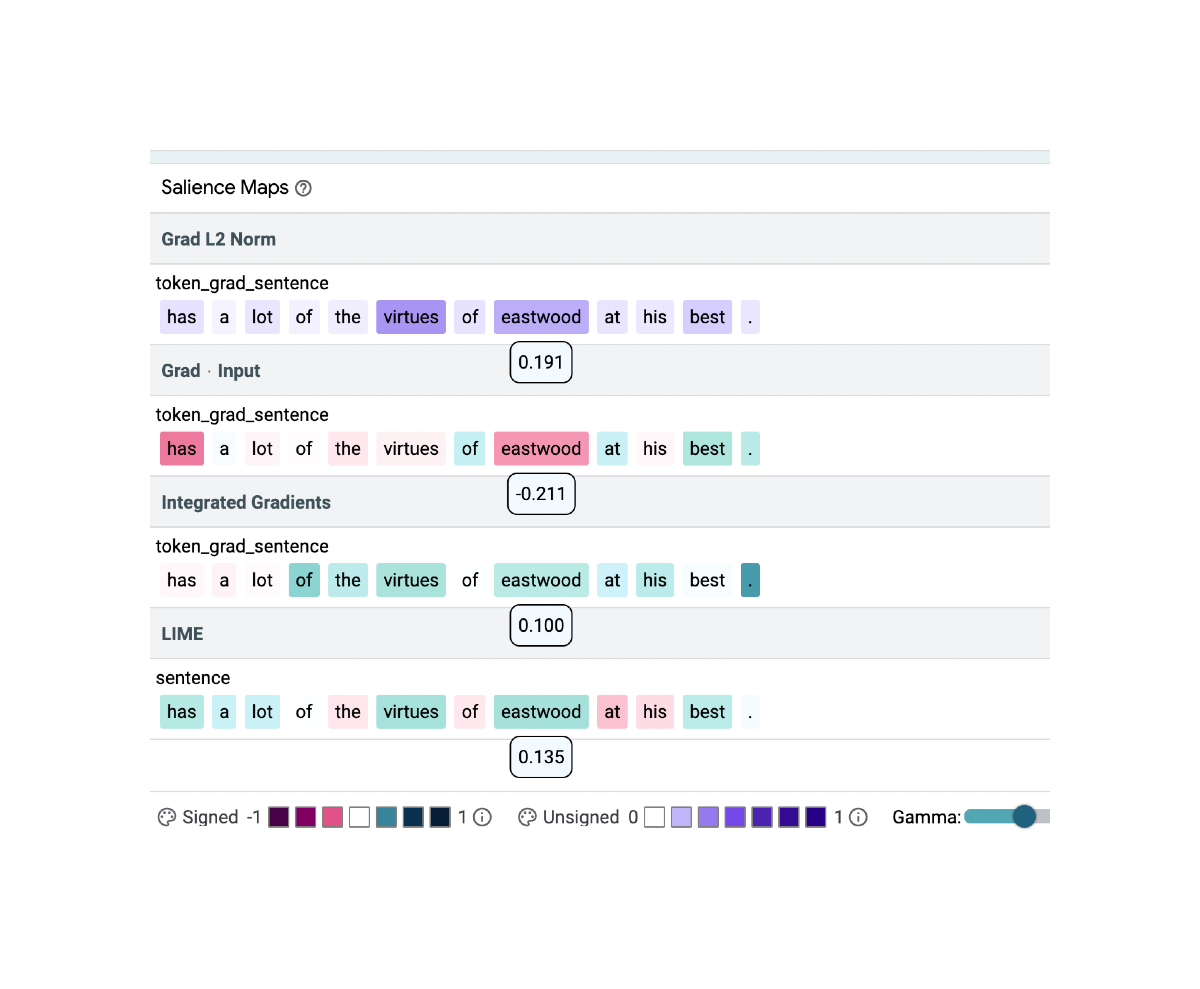

Will You Find These Shortcuts?

Posted by Katja Filippova, Research Scientist, and Sebastian Ebert, Software Engineer, Google Research, Brain team

Modern machine learning models that learn to solve a task by going through many examples can achieve stellar performance when evaluated on a test set, but sometimes they are right for the “wrong” reasons: they make correct predictions but use…

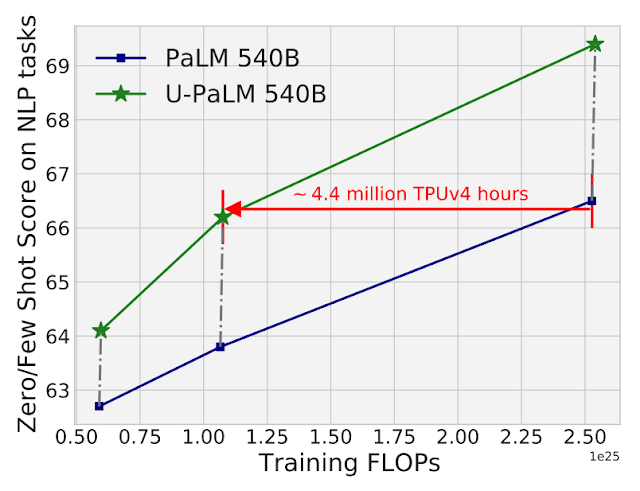

Better Language Models Without Massive Compute

Posted by Jason Wei and Yi Tay, Research Scientists, Google Research, Brain Team

In recent years, language models (LMs) have become more prominent in natural language processing (NLP) research and are also becoming increasingly impactful in practice. Scaling up LMs has been shown to improve performance across a range of NLP tasks. For instance, scaling…

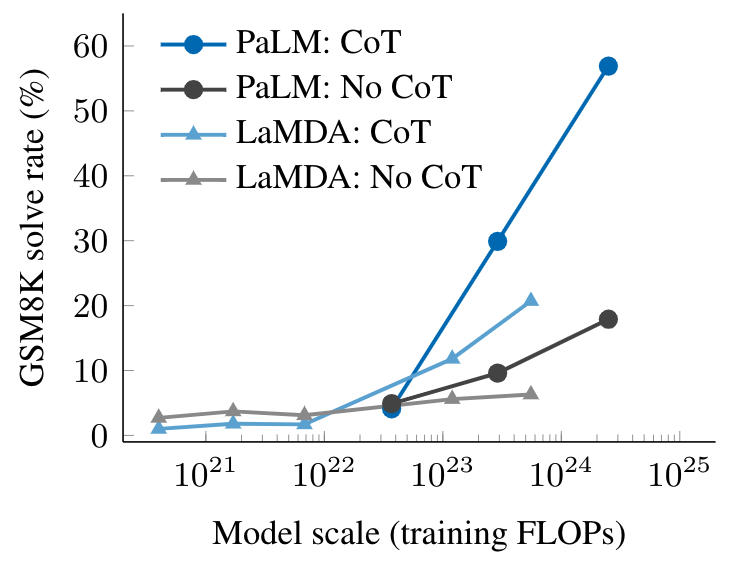

Characterizing Emergent Phenomena in Large Language Models

Posted by Jason Wei and Yi Tay, Research Scientists, Google Research, Brain Team

The field of natural language processing (NLP) has been revolutionized by language models trained on large amounts of text data. Scaling up the size of language models often leads to improved performance and sample efficiency on a range of downstream NLP tasks….
