Accelerating Text Generation with Confident Adaptive Language Modeling (CALM)

Posted by Tal Schuster, Research Scientist, Google Research Language models (LMs) are the driving force behind many recent breakthroughs in natural language processing. Models like T5, LaMDA, GPT-3, and PaLM have demonstrated impressive performance on various language tasks. While multiple factors can contribute to improving the performance of LMs, some recent studies suggest that scaling…

Read More

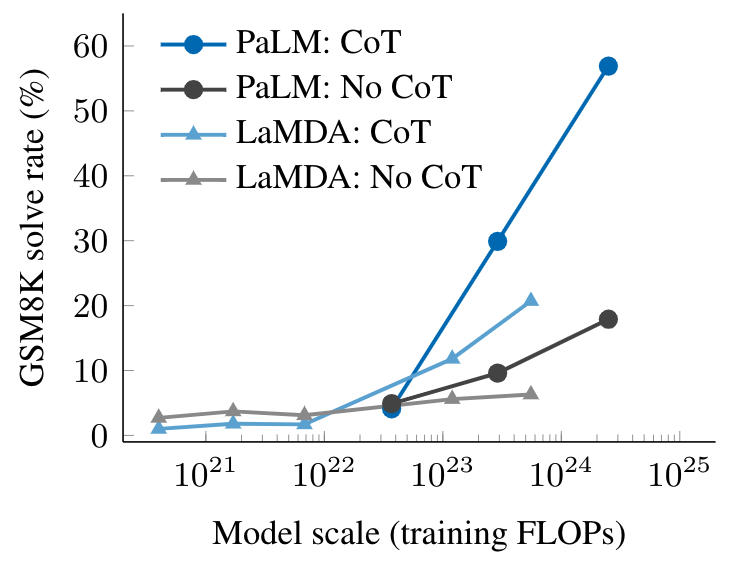

Characterizing Emergent Phenomena in Large Language Models

Posted by Jason Wei and Yi Tay, Research Scientists, Google Research, Brain Team The field of natural language processing (NLP) has been revolutionized by language models trained on large amounts of text data. Scaling up the size of language models often leads to improved performance and sample efficiency on a range of downstream NLP tasks….

Read MoreNew and Improved Content Moderation Tooling

We are introducing a new and improved content moderation tool: The Moderation endpoint improves upon our previous content filter, and is available for free today to OpenAI API developers. To help developers protect their applications against possible misuse, we are introducing the faster and more accurate Moderation endpoint. This endpoint provides OpenAI API developers with…

Read More