Generative AI: Navigating Innovation and Responsibility in the New Era

In the ever-evolving landscape of artificial intelligence (AI), the emergence of generative AI has ignited both excitement and apprehension. Unlike traditional AI, which largely relied on specialized teams and resources, generative AI democratizes the power of machine learning. This shift, while brimming with innovation, comes with newfound responsibilities and risks that have set off a…

Exploring the Creative Landscape of Generative AI: Unleashing Innovation and Possibilities

In the ever-evolving realm of artificial intelligence (AI), a new frontier is emerging—one that holds immense promise and intrigue. Generative AI, a groundbreaking concept, is transforming the way we interact with technology, create content, and innovate across industries. But what exactly is generative AI, and why is it sparking such excitement and curiosity? Decoding Generative…

Beyond Technology: The Crucial Integration of AI Strategy with Business Strategy

Conventional wisdom often pegs AI as a technological marvel, fostering the belief that its implementation revolves around technological considerations alone. Yet, the most striking revelation from this study is that AI triumphs not because of technological prowess, but because of its seamless integration into overall business strategy. Unlike many companies that approach AI through an…

Why watermarking AI-generated content won’t guarantee trust online

In late May, the Pentagon appeared to be on fire. A few miles away, White House aides and reporters scrambled to figure out whether a viral online image of the exploding building was in fact real. It wasn’t. It was AI-generated. Yet government officials, journalists, and tech companies were unable to take action before the…

Why it’s impossible to build an unbiased AI language model

This story originally appeared in The Algorithm, our weekly newsletter on AI. AI language models have recently become the latest frontier in the US culture wars. Right-wing commentators have accused ChatGPT of having a “woke bias,” and conservative groups have started developing their own…

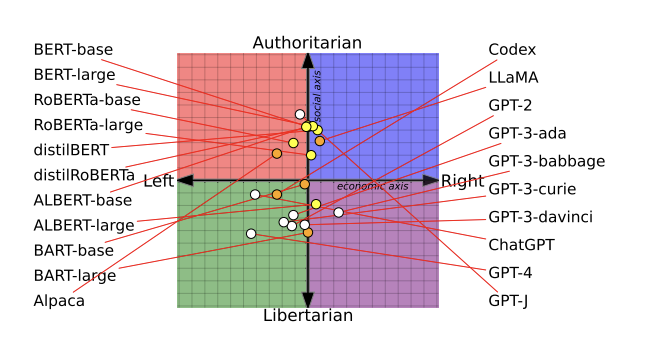

AI language models are rife with political biases

Should companies have social responsibilities? Or do they exist only to deliver profit to their shareholders? If you ask an AI, you might get wildly different answers depending on which one you ask. While OpenAI’s older GPT-2 and GPT-3 Ada models would advance the former statement, GPT-3 Da Vinci, the company’s more capable model, would…

These new tools could help protect our pictures from AI

This story originally appeared in The Algorithm, our weekly newsletter on AI. Earlier this year, when I realized how ridiculously easy generative AI has made it to manipulate people’s images, I maxed out the privacy settings on my social media accounts and swapped…

AI builds momentum for smarter health care

The pharmaceutical industry operates under one of the highest failure rates of any business sector. The success rate for drug candidates entering clinical Phase 1 trials (the earliest type of clinical testing, which can take 6 to 7 years) is anywhere between 9% and 12%, depending on the year, with costs to bring a drug from discovery…

This new tool could protect your pictures from AI manipulation

Remember that selfie you posted last week? There’s currently nothing stopping someone taking it and editing it using powerful generative AI systems. Even worse, thanks to the sophistication of these systems, it might be impossible to prove that the resulting image is fake. The good news is that a new tool, created by researchers at…
