We’ve trained and are open-sourcing a neural net called Whisper that approaches human-level robustness and accuracy on English speech recognition.


Whisper examples:

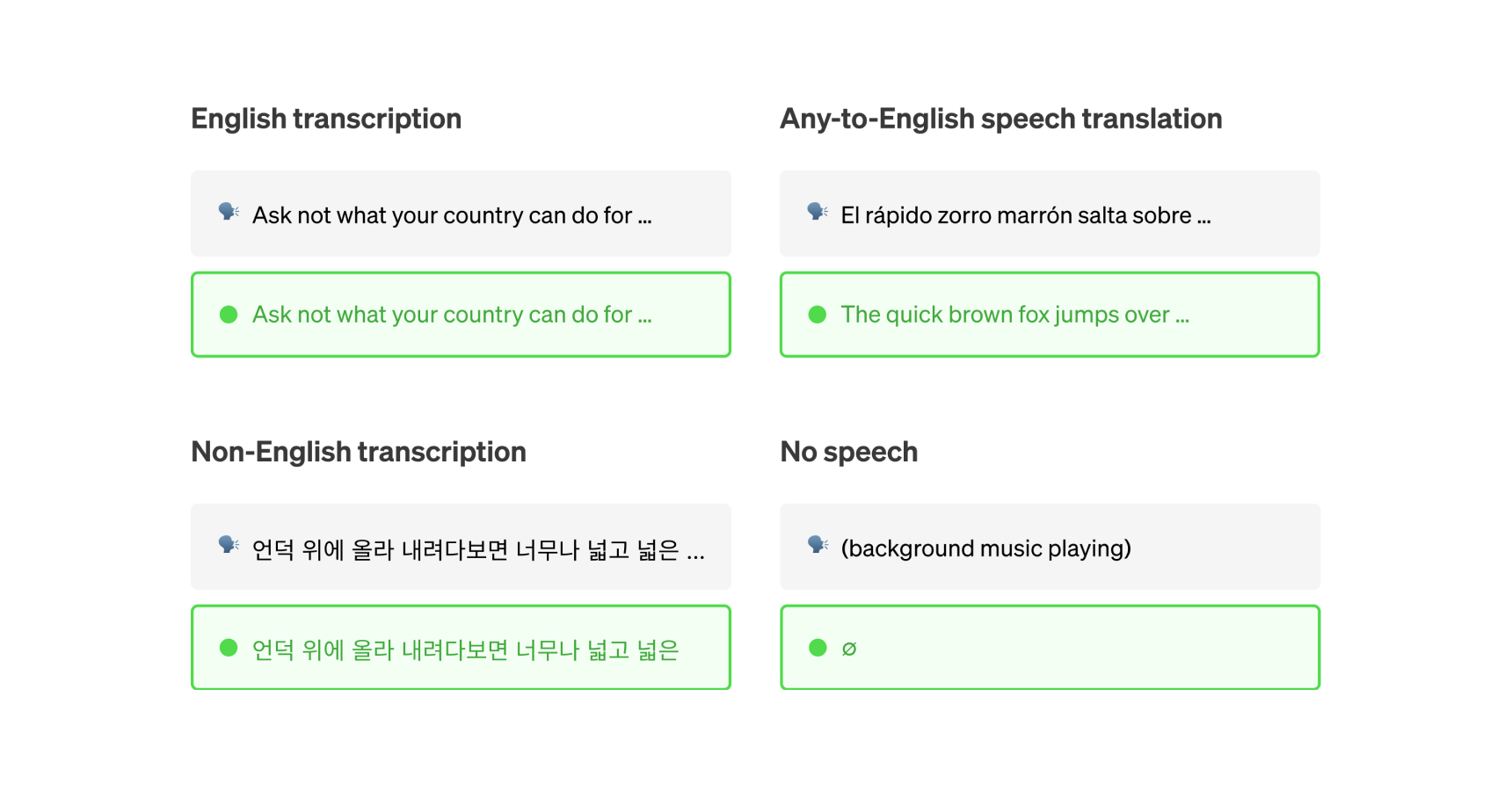

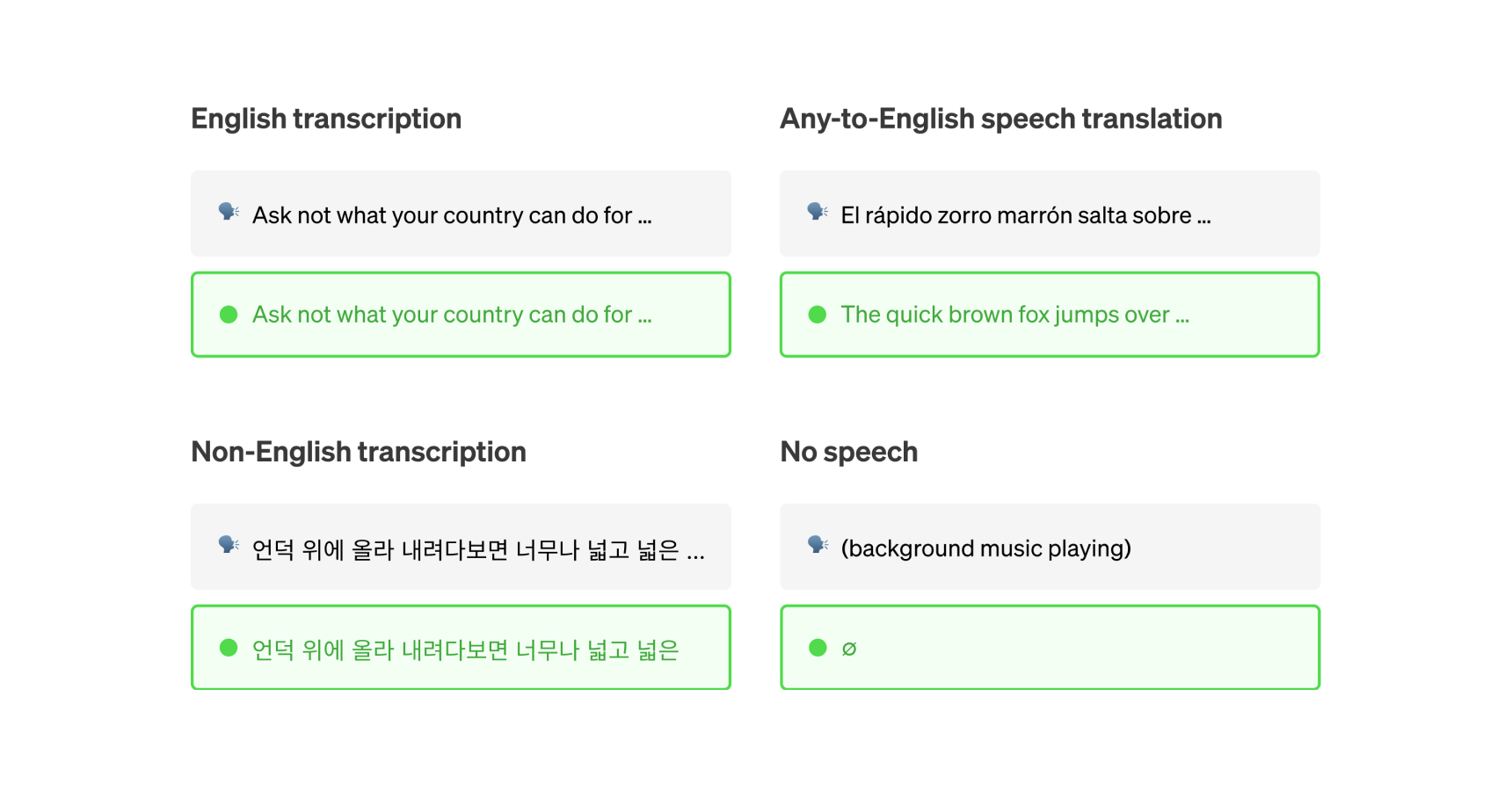

Whisper is an automatic speech recognition (ASR) system trained on 680,000 hours of multilingual and multitask supervised data collected from the web. We show that the use of such a large and diverse dataset leads to improved robustness to accents, background noise and technical language. Moreover, it enables transcription in multiple languages, as well as translation from those languages into English. We are open-sourcing models and inference code to serve as a foundation for building useful applications and for further research on robust speech processing.

The Whisper architecture is a simple end-to-end approach, implemented as an encoder-decoder Transformer. Input audio is split into 30-second chunks, converted into a log-Mel spectrogram, and then passed into an encoder. A decoder is trained to predict the corresponding text caption, intermixed with special tokens that direct the single model to perform tasks such as language identification, phrase-level timestamps, multilingual speech transcription, and to-English speech translation.
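The first preprocessing step above, cutting input audio into fixed 30-second windows, can be sketched in a few lines. This is a minimal illustration, not Whisper's actual segmentation logic. The 16 kHz sample rate and the 160-sample (10 ms) spectrogram hop are assumptions taken from the open-source release rather than from this post, and the helper names `split_into_chunks` and `frames_per_chunk` are hypothetical.

```python
from typing import List

# ASSUMPTIONS (not stated in this post): 16 kHz mono input and a 10 ms
# log-Mel hop, matching the values used in the open-source release.
SAMPLE_RATE = 16_000                          # samples per second (assumed)
CHUNK_SECONDS = 30                            # window length described in the post
HOP_LENGTH = 160                              # 160 samples = 10 ms at 16 kHz (assumed)
CHUNK_SAMPLES = SAMPLE_RATE * CHUNK_SECONDS   # 480,000 samples per window


def split_into_chunks(samples: List[float]) -> List[List[float]]:
    """Hypothetical helper: cut audio into consecutive 30 s windows."""
    chunks = []
    for start in range(0, len(samples), CHUNK_SAMPLES):
        chunk = samples[start:start + CHUNK_SAMPLES]
        # Zero-pad the final, shorter window so every encoder input has the same length.
        chunk = chunk + [0.0] * (CHUNK_SAMPLES - len(chunk))
        chunks.append(chunk)
    return chunks


def frames_per_chunk() -> int:
    """Hypothetical helper: spectrogram frames per 30 s window at the assumed hop."""
    return CHUNK_SAMPLES // HOP_LENGTH


if __name__ == "__main__":
    audio = [0.1] * (SAMPLE_RATE * 65)   # 65 seconds of dummy audio
    chunks = split_into_chunks(audio)
    print(len(chunks), [len(c) for c in chunks], frames_per_chunk())
```

Because every window is padded to the same length, the encoder always receives a spectrogram of the same shape (3,000 frames under the assumed hop), which keeps the model input fixed-size.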

Other existing approaches frequently use smaller, more closely paired audio-text training datasets, or use broad but unsupervised audio pretraining. Because Whisper was trained on a large and diverse dataset and was not fine-tuned to any specific one, it does not beat models that specialize in LibriSpeech performance, a famously competitive benchmark in speech recognition. However, when we measure Whisper’s zero-shot performance across many diverse datasets we find it is much more robust and makes 50% fewer errors than those models.
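The post does not name the error metric behind "50% fewer errors". Word error rate (WER) is the standard measure in speech recognition, so the sketch below assumes WER and shows how a relative reduction is computed from two error rates. The function names are hypothetical, and the numbers in the usage note are illustrative only, not Whisper results.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    # prev[j] = edit distance between the reference words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of a reference word
                curr[j - 1] + 1,         # insertion of an extra hypothesis word
                prev[j - 1] + (r != h),  # substitution (free when the words match)
            )
        prev = curr
    return prev[len(hyp)] / max(len(ref), 1)


def relative_error_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction of the baseline's errors that the new system avoids."""
    return (baseline_wer - new_wer) / baseline_wer
```

For example, `word_error_rate("the cat sat on the mat", "the cat sat on mat")` is 1/6 (one deleted word out of six), and a model whose WER drops from 0.20 to 0.10 relative to a baseline has a `relative_error_reduction` of 0.5, i.e. "50% fewer errors".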

About a third of Whisper’s audio dataset is non-English, and for that audio the model is alternately given the task of transcribing in the original language or translating to English. We find this approach is particularly effective at learning speech-to-text translation: zero-shot, Whisper outperforms the supervised SOTA on CoVoST2 to-English translation.
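Switching between transcription and translation is done with the special tokens described in the architecture paragraph: the decoder's prefix names the spoken language and the task. The sketch below builds such a prefix. The exact token spellings (`<|startoftranscript|>`, `<|transcribe|>`, `<|translate|>`, `<|notimestamps|>`, `<|fr|>`) are assumptions based on the open-source tokenizer, not something this post specifies, and `build_prompt` is a hypothetical helper.

```python
from typing import List

# ASSUMPTION: token spellings follow the open-source Whisper tokenizer.
SOT = "<|startoftranscript|>"
NO_TIMESTAMPS = "<|notimestamps|>"
TASKS = {"transcribe": "<|transcribe|>", "translate": "<|translate|>"}


def build_prompt(language: str, task: str, timestamps: bool = False) -> List[str]:
    """Hypothetical helper: special-token prefix that tells one decoder which job to do."""
    if task not in TASKS:
        raise ValueError(f"unknown task: {task!r}")
    # The language token names the *spoken* language; the task token picks
    # same-language transcription or translation into English.
    tokens = [SOT, f"<|{language}|>", TASKS[task]]
    if not timestamps:
        tokens.append(NO_TIMESTAMPS)
    return tokens
```

For French audio, `build_prompt("fr", "transcribe")` asks for French text, while `build_prompt("fr", "translate")` differs only in the task token and asks for English text. The same weights serve both jobs, which is what lets the training data alternate between them.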

We hope Whisper’s high accuracy and ease of use will allow developers to add voice interfaces to a much wider set of applications. Check out the paper, model card, and code to learn more details and to try out Whisper.


Whisper example transcripts:

Speed talking (audio: https://cdn.openai.com/whisper/draft-20220913a/micro-machines.wav)

This is the Micro Machine Man presenting the most midget miniature motorcade of Micro Machines. Each one has dramatic details, terrific trim, precision paint jobs, plus incredible Micro Machine Pocket Play Sets. There’s a police station, fire station, restaurant, service station, and more. Perfect pocket portables to take any place. And there are many miniature play sets to play with, and each one comes with its own special edition Micro Machine vehicle and fun, fantastic features that miraculously move. Raise the boatlift at the airport marina. Man the gun turret at the army base. Clean your car at the car wash. Raise the toll bridge. And these play sets fit together to form a Micro Machine world. Micro Machine Pocket Play Sets, so tremendously tiny, so perfectly precise, so dazzlingly detailed, you’ll want to pocket them all. Micro Machines are Micro Machine Pocket Play Sets sold separately from Galoob. The smaller they are, the better they are.

K-Pop (audio: https://cdn.openai.com/whisper/draft-20220913a/younha.wav)

While darkness was my everything

I ran so hard that I ran out of breath

Never say time’s up

Like the end of the boundary

Because my end is not the end

French, translated to English (audio: https://cdn.openai.com/whisper/draft-20220920a/multilingual.wav)

Whisper is an automatic speech recognition system based on 680,000 hours of multilingual and multitasking data collected on the Internet. We establish that the use of such a number of data is such a diversity and the reason why our system is able to understand many accents, regardless of the background noise, to understand technical vocabulary and to successfully translate from various languages into English. We distribute as a free software the source code for our models and for the inference, so that it can serve as a starting point to build useful applications and to help progress research in speech processing.

Accent (audio: https://cdn.openai.com/whisper/draft-20220920a/scottish-accent.wav)

One of the most famous landmarks on the Borders, it’s three hills and the myth is that Merlin, the magician, split one hill into three and left the two hills at the back of us which you can see. The weather’s never good though, we stay on the Borders with the mists on the Yildens, we never get the good weather and as you can see today there’s no sunshine, it’s a typical Scottish Borders day.

Note: Whisper transcribed “Eildons” as “Yildens”.
