Introducing the GenAI models you haven’t heard of yet

Ever since OpenAI’s ChatGPT set adoption records last winter, companies of all sizes have been trying to figure out how to put some of that sweet generative AI magic to use. In fact, according to Lucidworks’ global generative AI benchmark study released August 10, 96% of executives and managers involved in AI decision processes are…

Read More

Is Generative AI another Watson?

In 2011, IBM’s Watson supercomputer made history by crushing two masters of trivia in a game of “Jeopardy!”. Touting its AI abilities, the company decided to make a substantial investment toward applying its power to solve healthcare. Now, after billions invested and a series of high-profile setbacks, the company is effectively selling Watson and sun-setting…

Read More

Accelerating Text Generation with Confident Adaptive Language Modeling (CALM)

Posted by Tal Schuster, Research Scientist, Google Research

Language models (LMs) are the driving force behind many recent breakthroughs in natural language processing. Models like T5, LaMDA, GPT-3, and PaLM have demonstrated impressive performance on various language tasks. While multiple factors can contribute to improving the performance of LMs, some recent studies suggest that scaling…

Read More

New and Improved Embedding Model

We are excited to announce a new embedding model which is significantly more capable, cost-effective, and simpler to use. The new model, text-embedding-ada-002, replaces five separate models for text search, text similarity, and code search, and outperforms our previous most capable model, Davinci, at most tasks, while being priced 99.8% lower. Embeddings…

Read More

Who Said What? Recorder’s On-device Solution for Labeling Speakers

Posted by Quan Wang, Senior Staff Software Engineer, and Fan Zhang, Staff Software Engineer, Google

In 2019 we launched Recorder, an audio recording app for Pixel phones that helps users create, manage, and edit audio recordings. It leverages recent developments in on-device machine learning to transcribe speech, recognize audio events, suggest tags for titles, and…

Read More

Google at EMNLP 2022

Posted by Malaya Jules, Program Manager, Google

This week, the premier conference on Empirical Methods in Natural Language Processing (EMNLP 2022) is being held in Abu Dhabi, United Arab Emirates. We are proud to be a Diamond Sponsor of EMNLP 2022, with Google researchers contributing at all levels. This year we are presenting over 50…

Read More

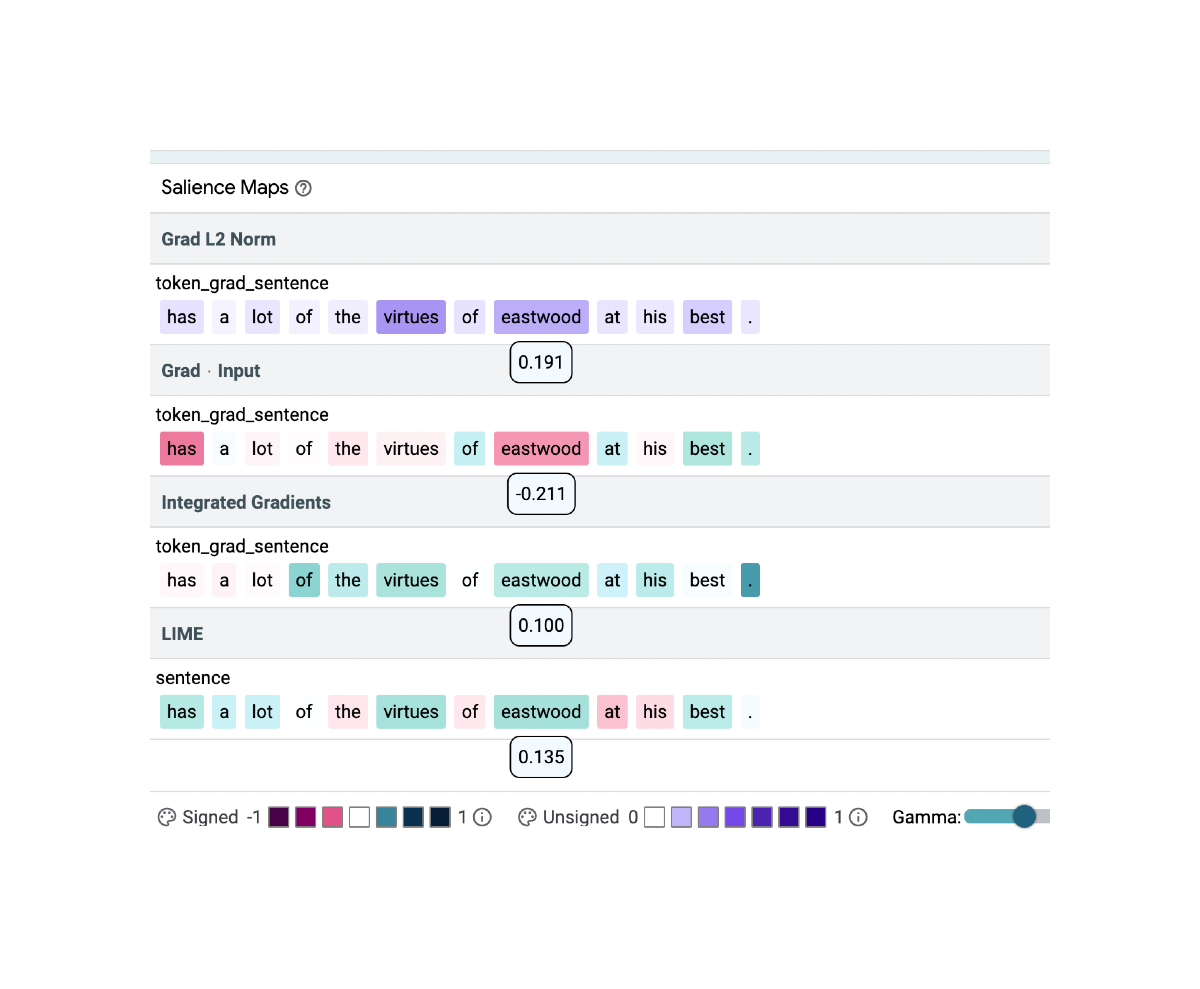

Will You Find These Shortcuts?

Posted by Katja Filippova, Research Scientist, and Sebastian Ebert, Software Engineer, Google Research, Brain team

Modern machine learning models that learn to solve a task by going through many examples can achieve stellar performance when evaluated on a test set, but sometimes they are right for the “wrong” reasons: they make correct predictions but use…

Read More

ChatGPT: Optimizing Language Models for Dialogue

We’ve trained a model called ChatGPT which interacts in a conversational way. The dialogue format makes it possible for ChatGPT to answer followup questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests. ChatGPT is a sibling model to InstructGPT, which is trained to follow an instruction in a prompt and provide a detailed…

Read More

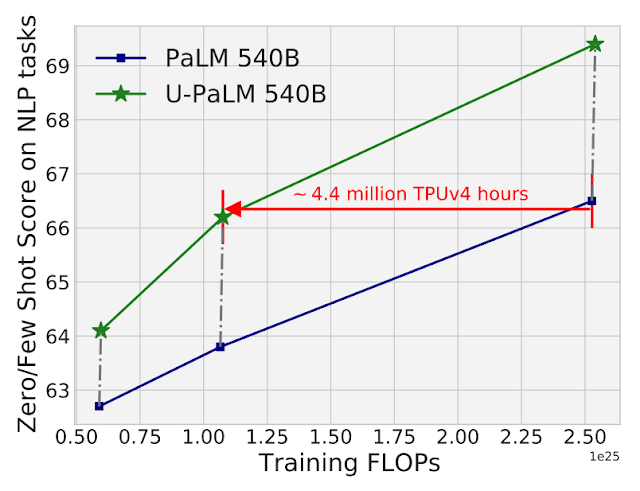

Better Language Models Without Massive Compute

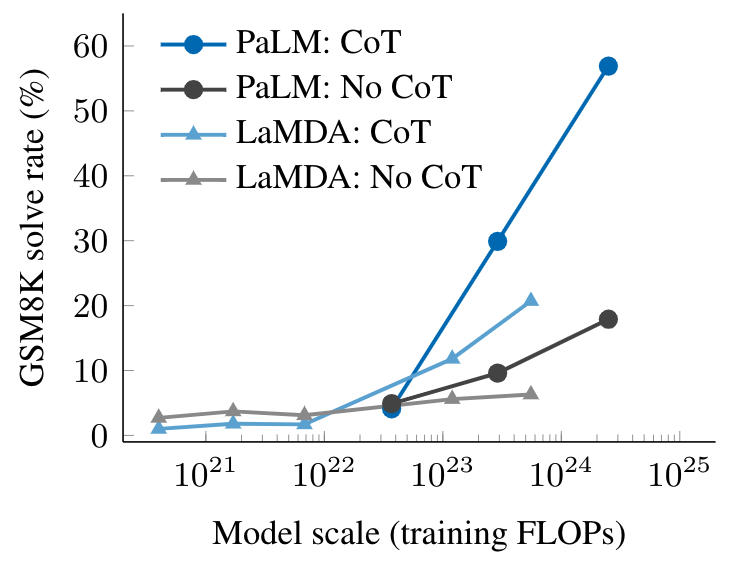

Posted by Jason Wei and Yi Tay, Research Scientists, Google Research, Brain Team

In recent years, language models (LMs) have become more prominent in natural language processing (NLP) research and are also becoming increasingly impactful in practice. Scaling up LMs has been shown to improve performance across a range of NLP tasks. For instance, scaling…

Read More

Characterizing Emergent Phenomena in Large Language Models

Posted by Jason Wei and Yi Tay, Research Scientists, Google Research, Brain Team

The field of natural language processing (NLP) has been revolutionized by language models trained on large amounts of text data. Scaling up the size of language models often leads to improved performance and sample efficiency on a range of downstream NLP tasks…

Read More